In a production environment, we have a new Dell PowerVault ME5024 and several Synology boxes, all connected over 10 GbE.

Each host in the ESXi cluster has two 10 GbE NICs, the PowerVault has eight 10 GbE NICs (4 x 2 in failover), and each Synology has two 10 GbE NICs.

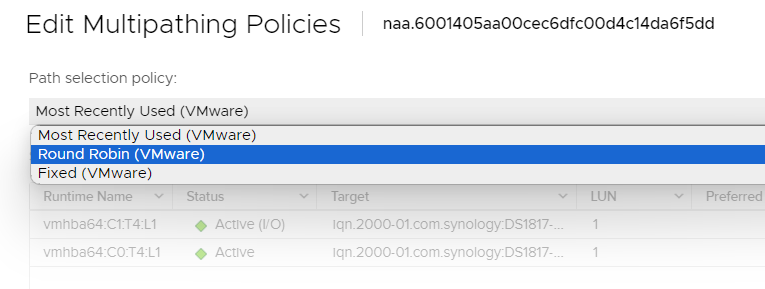

The client's IT manager configured VMware with iSCSI on all available NICs, but the current path selection policy (Most Recently Used) only uses one NIC to access the datastores on the Synology boxes.

To use all available paths and get the most out of iSCSI, we will change the path selection policy on the VMware hosts to Round Robin, and also tune Round Robin to switch paths after every 1 I/O operation instead of the default of 1000 per path.

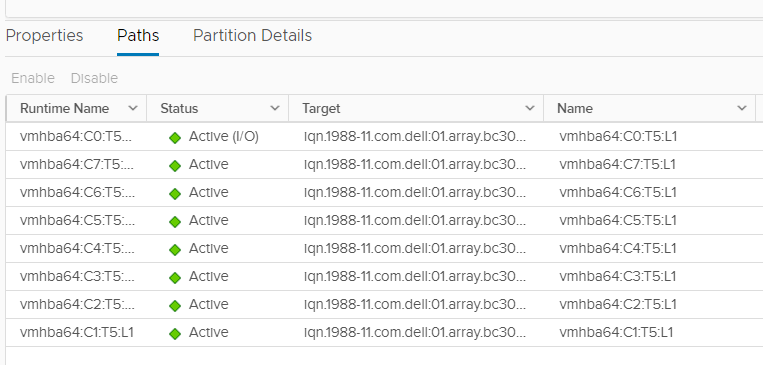

Available paths on Dell ME5024 before Round Robin configuration:

This demo was done on an ESXi 6.7 environment.

To start, enable SSH on the ESXi hosts.

Connect via terminal and execute:

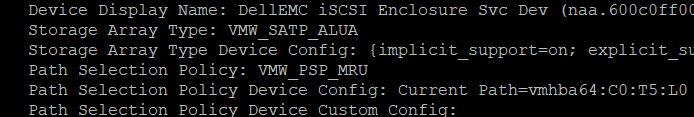

esxcli storage nmp device list

This will list your datastores and their current configuration.

Path Selection Policy MRU = Most Recently Used
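The full listing is long when there are many devices. As a rough sketch, the output can be reduced to each device ID and its policy line. This assumes the device IDs start with `naa.` at the beginning of a line, as they do for the devices in this post; `summarize_psp` is just a helper name made up for this example.

```shell
# Keep only the device ID lines and their "Path Selection Policy:" lines.
# Reads the output of `esxcli storage nmp device list` on stdin.
summarize_psp() {
  grep -E '^naa\.|Path Selection Policy:'
}

# Only query the host when esxcli is actually available (i.e. on ESXi).
if command -v esxcli >/dev/null 2>&1; then
  esxcli storage nmp device list | summarize_psp
fi
```

Devices still on the default policy should show `VMW_PSP_MRU` next to their ID.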

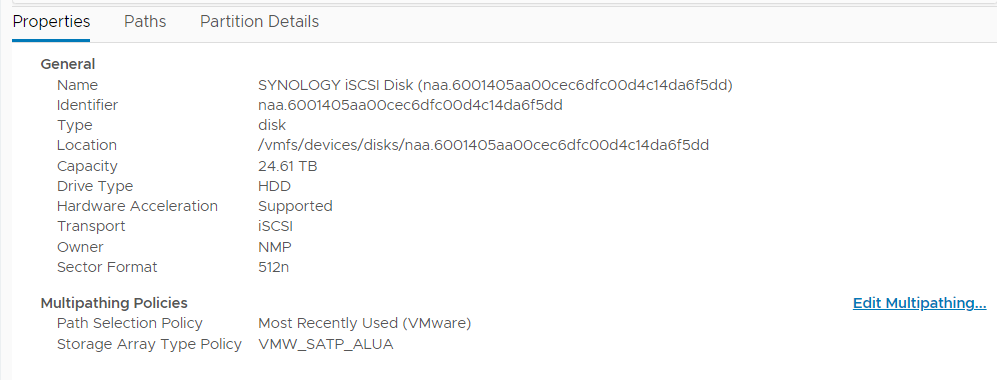

Locate the datastore in the GUI and edit its multipathing policy, changing it to Round Robin (RR).
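If there are many datastores, the same change can probably be made from the shell instead of the GUI. The sketch below only prints one `esxcli storage nmp device set` command per matching device so they can be reviewed first. `PREFIX` and `psp_rr_commands` are placeholders invented for this example, and `naa.abc` must be replaced with the start of your own device IDs.

```shell
# Placeholder: replace with the first characters of your device IDs.
PREFIX="naa.abc"

# Print one "set policy to Round Robin" command per device ID read from stdin.
psp_rr_commands() {
  while read -r dev; do
    echo "esxcli storage nmp device set --device=$dev --psp=VMW_PSP_RR"
  done
}

# Only list devices when running on an ESXi host.
if command -v esxcfg-scsidevs >/dev/null 2>&1; then
  esxcfg-scsidevs -c | awk '{print $1}' | grep "^$PREFIX" | psp_rr_commands
fi
```

Once the printed commands look right, they can be run one by one, or the last pipeline can be piped into `sh`.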

Then run this command to set the Round Robin IOPS limit to 1. Replace ‘abc’ with the first characters of your datastore device IDs:

for i in `esxcfg-scsidevs -c |awk '{print $1}' | grep naa.abc`; do esxcli storage nmp psp roundrobin deviceconfig set --type=iops --iops=1 --device=$i; done
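To check that the change took effect, the Round Robin settings can be read back per device. This is a minimal sketch using the matching `deviceconfig get` command. `PREFIX` and `show_rr_config` are names made up for this example, and the prefix again needs to match your own devices.

```shell
# Placeholder: replace with the first characters of your device IDs.
PREFIX="naa.abc"

# Show the Round Robin settings (limit type, IOPS limit) for one device ID.
show_rr_config() {
  esxcli storage nmp psp roundrobin deviceconfig get --device="$1"
}

# Only query the host when esxcli is actually available (i.e. on ESXi).
if command -v esxcli >/dev/null 2>&1; then
  for dev in $(esxcfg-scsidevs -c | awk '{print $1}' | grep "^$PREFIX"); do
    show_rr_config "$dev"
  done
fi
```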

VMware config:

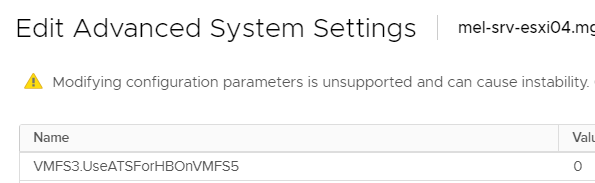

In the ESXi host's web interface, edit the Advanced System Settings and set the following value:

Property Name: VMFS3.UseATSForHBOnVMFS5

Value: 0
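The same advanced setting can also be changed over SSH, which helps when several hosts need it. A sketch, assuming the option path `/VMFS3/UseATSForHBOnVMFS5` corresponds to the property name above; `apply_ats_hb_setting` is a helper name invented here.

```shell
# Option path for the VMFS3.UseATSForHBOnVMFS5 property.
OPTION="/VMFS3/UseATSForHBOnVMFS5"

# Set the option to 0, then list it to confirm the new value.
apply_ats_hb_setting() {
  esxcli system settings advanced set --option="$OPTION" --int-value=0
  esxcli system settings advanced list --option="$OPTION"
}

# Only touch the host when esxcli is actually available (i.e. on ESXi).
if command -v esxcli >/dev/null 2>&1; then
  apply_ats_hb_setting
fi
```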

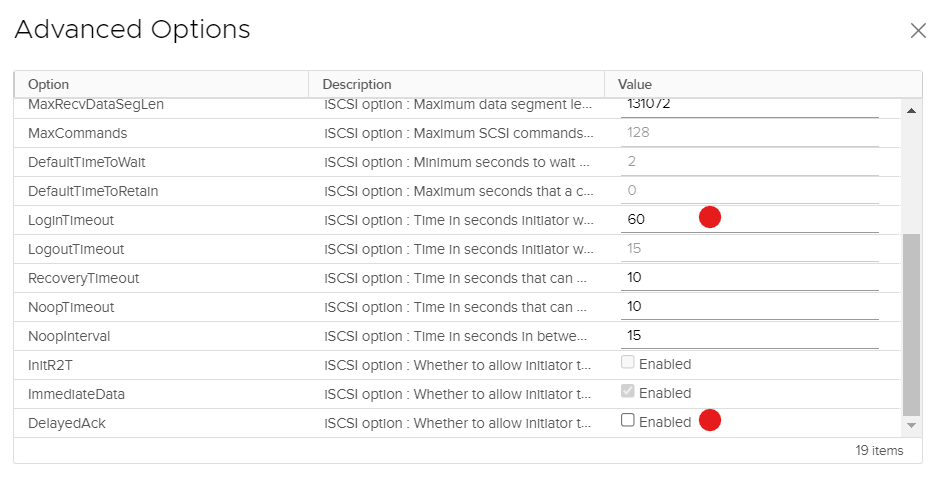

Set these values on the iSCSI adapter's “Advanced Options” tab:

Login Timeout: 60

Delayed Ack: unchecked

Note: Only change Delayed Ack, on a per-datastore/SAN basis, if you notice very low I/O performance. I have not rolled it out in this scenario.
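For reference, the login timeout can likely be set from the shell as well. In this sketch `vmhba64` is a hypothetical adapter name (find the real one with `esxcli iscsi adapter list`) and `set_iscsi_param` is a helper made up for this example. The Delayed Ack line is left commented out because it was not rolled out in this scenario, and its key name should be confirmed against the `param get` output before use.

```shell
# Hypothetical adapter name; check yours with: esxcli iscsi adapter list
ADAPTER="vmhba64"

# Set one iSCSI adapter parameter: $1 is the key, $2 is the value.
set_iscsi_param() {
  esxcli iscsi adapter param set --adapter="$ADAPTER" --key="$1" --value="$2"
}

# Only touch the host when esxcli is actually available (i.e. on ESXi).
if command -v esxcli >/dev/null 2>&1; then
  set_iscsi_param LoginTimeout 60
  # set_iscsi_param DelayedAck false   # only if I/O performance is very low
  esxcli iscsi adapter param get --adapter="$ADAPTER"
fi
```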

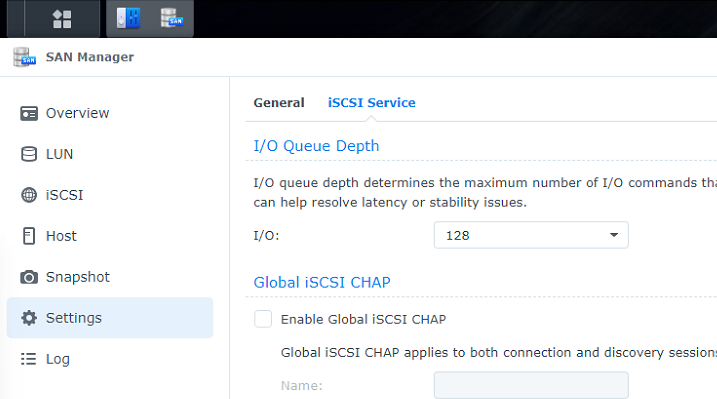

Synology:

Set the I/O queue depth to 128.